At the tech conference Vortech.by/Vidispine next week in Stockholm, our CEO Jonas Sandberg will give a talk about how Accurate Player can be used to extract and enrich metadata for quality control and compliance. In this article, we explore some of the conceptual themes behind streamlining manual metadata work with ML/AI in the cloud.

At Accurate Player, we use Google Drive to create, collaborate on and manage all our documents and files so that they are easy to access and retrieve. We have what I think is a structured, company-specific folder system for organizing them. Yet from time to time I struggle to find the folders or files I am looking for. Even with the powerful search capabilities on offer, I can spend a good deal of time searching before I find the file. More often than not, I give up and ask the colleague who owns the document to point me in the right direction or simply share the link with me.

I have noticed that the person who created a particular folder or system is usually the most adept at locating files within it. This is because each individual has their own personal approach to taxonomy, shaped by their own experiences and learning. If this is a challenge in a relatively small team like mine, imagine the challenge in larger and more complex organizations, such as media and broadcast companies, that deal with hundreds or even thousands of files.

This is one of the reasons why metadata is the common denominator that is pivotal to making sense of the myriad versions of media within the media supply chain. Yet many organizations approach metadata management and taxonomy much like the anecdote above, i.e. the result is only as good as the individual or individuals behind the process. The challenge is compounded further when organizations choose off-the-shelf products to extract and manage their metadata, much like we expect Google Drive's search function to magically help us find the files we have created.

Is there a way out of this labyrinth? Thankfully, yes. With the advent of cloud-native technologies and machine learning, there may be better ways to build scalable and reliable metadata extraction and management tools. This approach makes it possible to build tools that uniquely reflect the needs of the organization or team while doing away with the individual idiosyncrasies that creep in due to human involvement. The key is to leverage microservices that allow you to build, test, deploy and scale the process iteratively.

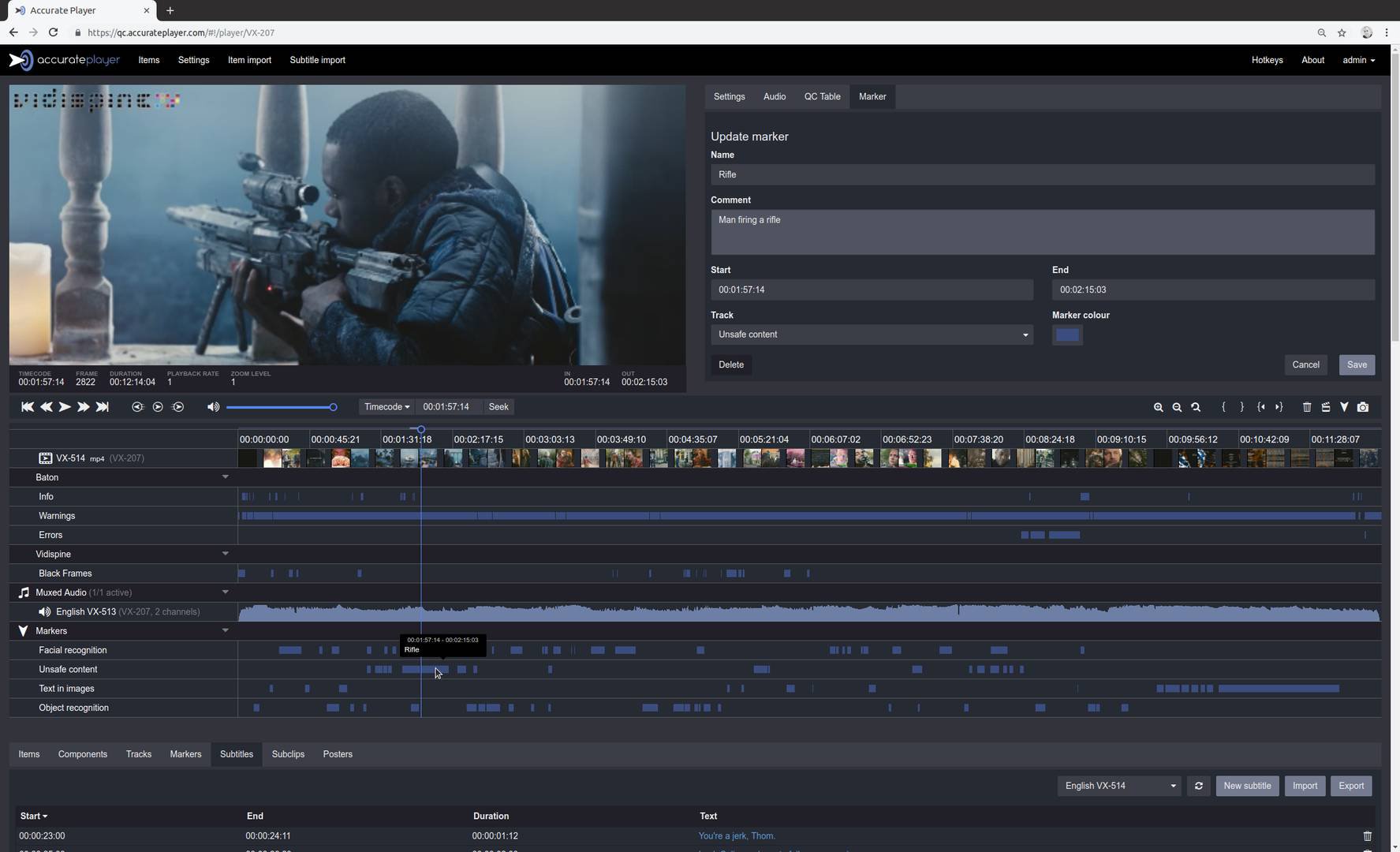

A possible way to achieve this is to create a framework that defines how manual metadata is categorized across different workflows. An ML/AI model could then be built on this framework and trained to spot deviations in manually entered metadata. The model flags these deviations, and a human can then QA the results, correct them and tweak the model so that it conforms to the metadata guidelines prescribed in the framework. Once the model performs at a high confidence level, it is battle-tested in a full-fledged workflow. Repeat the process for each step of the media supply chain and, over time, you should end up with a highly accurate, repeatable metadata conform model that needs minimal human QA. Of course, it is important to review both the model and the data from time to time, especially when the workflow changes or grows. The intention is also to reduce manual intervention so that you can focus on more creative or value-adding tasks. I see great potential in this approach to help manual QC operators reduce errors while conforming to the organization's metadata guidelines.
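To make the flag, review and retrain loop a little more concrete, here is a minimal Python sketch. It is not Accurate Player's implementation, and every name in it is hypothetical: the `FRAMEWORK` dict stands in for the metadata guidelines, a simple frequency count over human-approved records stands in for a trained ML/AI model, and `human_review` is a placeholder for the manual QA step.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field

# Hypothetical "framework": the controlled-vocabulary fields each workflow
# must fill in. A real framework would be far richer than this.
FRAMEWORK = {
    "ingest": ["language", "genre"],
}


@dataclass
class DeviationModel:
    """Frequency-based stand-in for an ML model: it learns which values are
    normal for each field from metadata that a human has already approved."""
    min_share: float = 0.05  # values rarer than this share of past records are flagged
    counts: dict = field(default_factory=lambda: defaultdict(Counter))

    def train(self, approved_records):
        # Count how often each value has been approved for each field.
        for record in approved_records:
            for key, value in record.items():
                self.counts[key][value] += 1

    def deviations(self, workflow, record):
        # Return (field, reason) pairs for anything that breaks the framework.
        flags = []
        for key in FRAMEWORK[workflow]:
            value = record.get(key)
            if value is None:
                flags.append((key, "missing"))
                continue
            seen = self.counts[key]
            total = sum(seen.values())
            share = seen[value] / total if total else 0.0
            if share < self.min_share:
                flags.append((key, f"unusual value {value!r}"))
        return flags


def qa_loop(model, workflow, records, human_review):
    """Flag deviations, let a human correct them, then retrain on the result."""
    approved = []
    for record in records:
        if model.deviations(workflow, record):
            record = human_review(record)  # manual QA corrects the flagged record
        approved.append(record)
    model.train(approved)  # corrections feed back into the model
    return approved


if __name__ == "__main__":
    model = DeviationModel()
    history = [{"language": "en", "genre": "drama"}] * 19 + [{"language": "sv", "genre": "drama"}]
    model.train(history)
    print(model.deviations("ingest", {"language": "English", "genre": "drama"}))
```

Run as a script, this prints `[('language', "unusual value 'English'")]`: "English" has never been approved for `language`, while the rarer but previously approved "sv" would pass. In practice one would likely replace the frequency counter with a properly trained model and run each step as its own service, but the shape of the loop (flag, review, retrain) would stay the same.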

Will we see completely hands-off metadata conform in the near future? Your guess is as good as mine. But this approach does offer a glimmer of hope.

This article was written by Dinesh. Dinesh works with Sales & Marketing at Accurate Player & Codemill. He can be reached at dinesh.damodaran@accurateplayer.com